Multinomial Logistic Regression in R

Published:

)](https://raw.githubusercontent.com/JakeJing/jakejing.github.io/master/_posts/pics/multi-logistic-reg-surface.png)

Now we can generalize the binary classification tasks into multiple classes, and build a multinomial logistic regression (MLR) model. If you want to refresh your understanding of binary logistic regression, pls refer to my previous blog. MLR is also known as multi-logit regression, polytomous LR, multi-class LR, softmax regression, multinomial logit, maximum entropy (MaxEnt) classifier, conditional maximum entropy model. In this blog, I will simulate some data with given parameter $\beta$ and recover the parameters of the MLR model via stan. Before that, it is important to know the differences (e.g., activation function) between binary and multinomial logistic regression models.

1. Softmax function vs. sigmoid function

The major difference between binary vs. multi-class logistic regression is the transformation function (sigmoid vs. softmax). The sigmoid function is used to convert the linear combination $X\beta$ into probabilities, whereas the softmax function is used to get the probabilities of possible outcomes. As you may expect, softmax is an extension of sigmoid function to multi-class cases. For mathematical proof of their relatedness, pls see this issue in stackexchange and this course material. I give a short summary of their equivalence here.

In the binary logistic regression, the predicted probabilities via sigmoid function is given as:

\[\begin{split} p(Y_i = 1 | X_i, \beta) &= \frac{1}{1 + e^{-X_i\beta}} \\ p(Y_i = 0 | X_i, \beta) &= \frac{e^{-X_i\beta}}{1 + e^{-X_i\beta}} \end{split}\]In the multinomial logistic regression with $K = 2$, the predicted probabilities via softmax function is:

\[\begin{split} p(Y_i = 1 | X_i, \beta) &= \frac{e^{X_{i}\beta_k}}{\sum_{k=1}^{K} e^{X_{i}\beta_k}} \\ &= \frac{e^{X_{i}\beta_1}}{e^{X_{i}\beta_0} + e^{X_{i}\beta_1}} \\ &= \frac{e^{X_{i}\beta_1}/e^{X_{i}\beta_1}}{(e^{X_{i}\beta_0} + e^{X_{i}\beta_1})/e^{X_{i}\beta_1}} \\ &= \frac{1}{1 + e^{-X_{i}(\beta_1 - \beta_0)}} \\ p(Y_i = 0 | X_i, \beta) &= \frac{e^{X_{i}\beta_0}}{e^{X_{i}\beta_0} + e^{X_{i}\beta_1}} \\ &= \frac{e^{X_{i}\beta_0}/e^{X_{i}\beta_1}}{(e^{X_{i}\beta_0} + e^{X_{i}\beta_1})/e^{X_{i}\beta_1}} \\ &= \frac{e^{-X_{i}(\beta_1 - \beta_0)}}{1 + e^{-X_{i}(\beta_1-\beta_0)}} \end{split}\]Let $\beta = \beta_1 - \beta_0$, you will turn the softmax function into the sigmoid function.

Pls don’t be confused about softmax and cross-entropy. Remember that softmax is an activation function or transformation ($\mathbb{R} \rightarrow p$) and cross-entropy is a loss function (see the next section). In the multinomial logistic regression, cross-entropy loss is equivalent to the negative log likelihood of categorial distribution.

2. Cross-entropy loss

Both binary and multinomial logistic regressions use cross-entropy loss, though MLR generate the loss function into multiple classes. As mentioned in my previous blog about logistic regression, the minimization of binary cross-entropy loss is equivalent to the maximum likelihood estimation (MLE) of Bernoulli distribution. For the multi-class classification tasks, the cross-entropy loss is the same as the MLE of categorical distribution.

The cross-entropy loss or negative log likelihood of categorical distribution is defined as:

\[\begin{split} L(\beta, x, y) &= L(\hat{y}, y) \\ & = \frac{1}{N}\sum _{i=1}^{N}H(p_{n},q_{n})\\ &=-{\frac {1}{N}}\sum _{i=1}^{N} \sum _{k=1}^{K} y_{ik}\log {\hat {y}}_{ik} \\ &= -{\frac {1}{N}}\sum _{i=1}^{N} \sum _{k=1}^{K} y_{ik}\log (\text{softmax}(x_i\beta)) \\ &= -{\frac {1}{N}}\sum _{i=1}^{N} \sum _{k=1}^{K} y_{ik}\log p_{ik} \\ \end{split}\]where $\text{softmax}$ transforms the results of $x_i\beta$ into probabilities, and $y_{ik}$ is the probability of the $i^{th}$ observed data in $k^{th}$ class. The estimated probability of $i^{th}$ observation in $k$ class is represented as $p_{ik}$. Take $K = 3$ as an example, when the observed data belong to the $1^{st}$ class, we will get $y_{i1} = 1$ and the rest $y_{i2} = y_{i3} = 0$. This gives the following loss function.

\[L(\beta, x, y) = -{\frac {1}{N}}\sum _{i=1}^{N} y_{i1}\log p_1 + y_{i2}\log p_2 + y_{i3}\log p_3\]For the MLE of categorical distribution and its analytic solution of $\theta$, pls refer to this answer in StachExchange. This shows that MLE of $\theta$ in the categorical distribution will converge to the probability of each category.

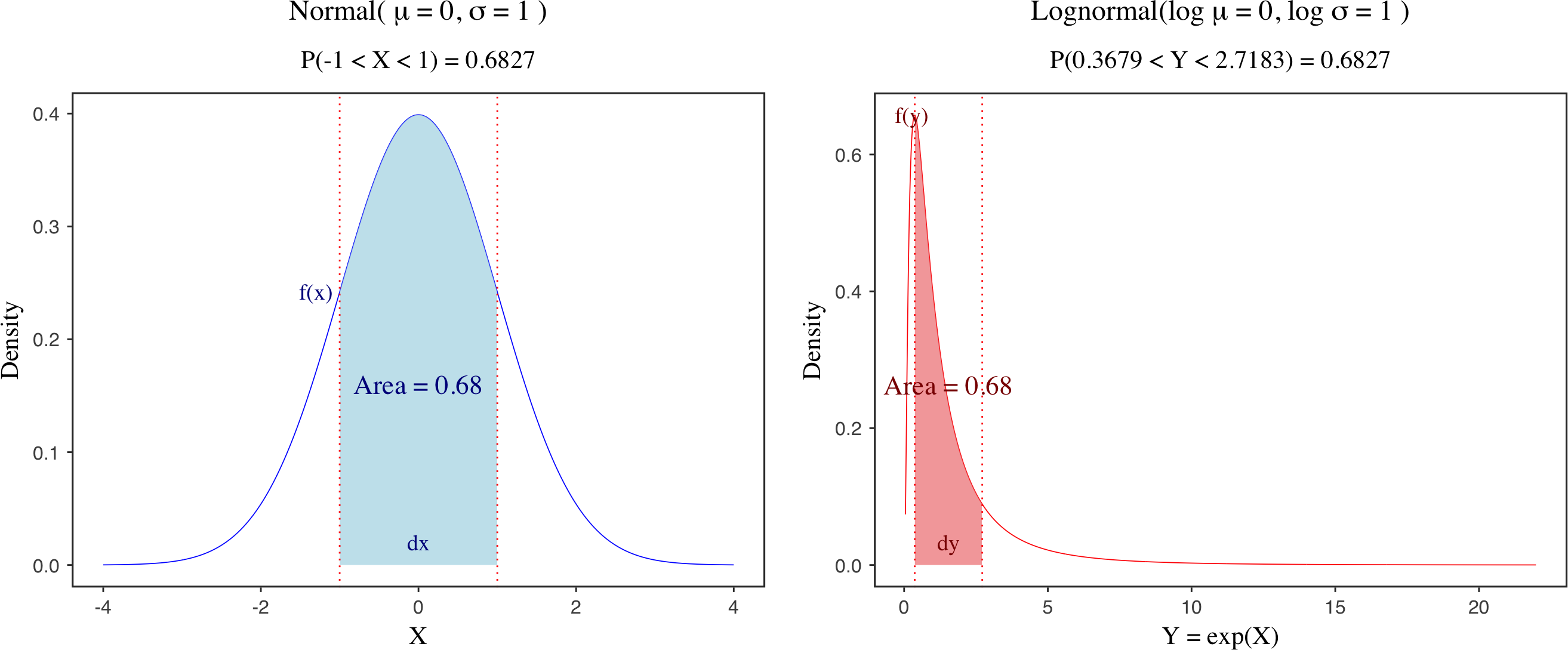

3. Form

\[\begin{split} y &\sim Categorical(p_{i, k}) , ~ i = 1, ..., N\\ p_{i,k} &= \frac{e^{z_{i, k}}}{\sum_{k=1}^{K} e^{z_{i, k}}}, ~ \sum_{k=1}^{K} p_{i,k} = 1\\ z_{i, k} &= X_{i, j}\beta_{j, k} \\ \beta_{j, K} &= 0 \\ \beta_{j, k} &\sim \mathcal{N}(0, 10), ~ j = 1, ..., J, ~ k = 1, ..., K-1 \end{split}\]where the input data $X$ is a $N * J$ matrix with $N$ observations $J$ number of features or predictors, and the parameter matrix $\beta$ is a $J * K$ matrix with $K$ number of classes. We take the $K^{th}$ class as the reference group, and therefore $\log(\frac{p(Y_i = K)}{p(Y_i = K)}) = X\beta = 0$. This means the parameter matrix $\beta$ for the $K^{th}$ class will be 0. The softmax transformation is applied to each row of the outcome $z$, so as to get the probability matrix $p$. In the probability matrix, each row is a simplex (sum up to 1). See the graphical representation of the multinomial logistic regression in this link.

)](https://raw.githubusercontent.com/JakeJing/jakejing.github.io/master/_posts/pics/multinomial_logistic.png)

Consider an example of 3 classes, we will have the following equations.

\[\begin{split} \log(\frac{p(Y_i = 1)}{p(Y_i = 3)}) &= X\beta_1 \Rightarrow p(Y_i = 1) = p(Y_i = 3)e^{X\beta_1} \\ \log(\frac{p(Y_i = 2)}{p(Y_i = 3)}) &= X\beta_2 \Rightarrow p(Y_i = 2) = p(Y_i = 3)e^{X\beta_2} \\ \end{split}\]Given $p(Y_i = 1) + p(Y_i = 2) + p(Y_i = 3) = 1$, we can get $p(Y_i = 3)$.

\[\begin{split} p(Y_i = 3) &= 1 - p(Y_i = 1) - p(Y_i = 2) \\ p(Y_i = 3) &= 1 - p(Y_i = 3)e^{X\beta_1} - p(Y_i = 3)e^{X\beta_2} \\ p(Y_i = 3) &= \frac{1}{1 + e^{X\beta_1} + e^{X\beta_2}} \end{split}\]Then we can bring $p(Y_i = 3)$ back to the above equations, and get $p(Y_i = 1)$ and $p(Y_i = 2)$. Here I summarize the probabilities for each class below. You may find that these probabilities are quite similar to the softmax function. Remember that we set the $3^{rd}$ class as the reference, this gives us $e^{X\beta_3} = 1$, since $\log(\frac{p(Y_i = 3)}{p(Y_i = 3)}) = X\beta_3 = 0$. By replacing 1 with $e^{X\beta_3}$, we will get exactly the same form of softmax function. This also indicates that the parameter matrix $\beta$ is estimated with respect to the last reference group.

\[\begin{split} p(Y_i = 1) &= \frac{e^{X\beta_1}}{1 + e^{X\beta_1} + e^{X\beta_2}} \\ p(Y_i = 2) &= \frac{e^{X\beta_2}}{1 + e^{X\beta_1} + e^{X\beta_2}} \\ p(Y_i = 3) &= \frac{1}{1 + e^{X\beta_1} + e^{X\beta_2}} \end{split}\]If you are interested in pair-wise comparisons between groups, you may know that $\beta_1$ indicates the difference in log odds between group 1 and group 3, and $\beta_2$ measures the difference in log odds between group 2 and group 3. The difference in log odds between group 1 and group 2 is: $\log(\frac{p(Y_i = 1)}{p(Y_i = 3)}) - \log(\frac{p(Y_i = 2)}{p(Y_i = 3)}) = \log(\frac{p(Y_i = 1)}{p(Y_i = 2)}) = X(\beta_1 - \beta_2)$. This means $\beta_1 - \beta_2$ gives us the difference between group 1 and group 2.

4. Data

We simulate the data by providing the parameter matrix $\beta_{j,k}$, which corresponds to $J$ features and $K$ classes. Since we take the $3^{rd}$ class as the reference, the last column of the $\beta$ matrix should be 0. The values in each row specify the parameters of intercept, $x_1$ and $x_2$ for each class, separately. The true functions for multinomial LR are $\log(\frac{p(Y_i = 1)}{p(Y_i = 3)}) = 1 -2x_1 + 3x_2$ and $\log(\frac{p(Y_i = 2)}{p(Y_i = 3)}) = -0.5 +4x_1 - 1.5x_2$.

In contrast to the binary LR, the parameters for $x_1$ and $x_2$ only measure the log odds ratio with respect the reference class. It cannot directly tell us the relationship with the probability of the class. In the case of binary LR, if the parameter for $x_1$ is positive, it suggests that the increase of $x_1$ would lead to higher $p(Y_i = 1)$. It is possible to interpret in this way, since the increase of $\log(\frac{p(Y_i = 1)}{p(Y_i = 0)})$ can directly imply that $p(Y_i = 1)$ over $p(Y_i = 0)$ is becoming bigger. However, we cannot directly interpret like this in MLR, since the increase of log odd ratio with respect to certain class doesn’t mean that the probability of getting the class is becoming higher. To get the relationships of predictor $x$ and the probability of each class $p(Y_i = k)$, we need to visualize the model results.

N <- 1000

J <- 3 # number of predictors (intercept, slp1, slp2)

X <- cbind(1, rnorm(N, 0, 1), rnorm(N, 0, 1)) # N * J

K <- 3 # num of classes

beta <- rbind(c(1, -0.5, 0),

c(-2, 4, 0),

c(3, -1.5, 0))

# J * K (features * classes) with the last class as the reference

# [cls1_intercept, cls2_intercept, cls3_intercept]

# [cls1_x1, cls2_x1, cls3_x1 ]

# [cls1_x2, cls2_x2, cls3_x2 ]

z <- X %*% beta

# rowwise softmax transformation

probs <- t(apply(z, 1, function(x) exp(x) / sum(exp(x))))

y <- apply(probs, 1, function(x) rcat(n = 1, prob = x))

5. Model

6. Run the analysis

data_mlogist <- list(N = N, X = X, J = J, K = K, y = y)

fit_mlogist <- sampling(stan_mlogist, data = data_mlogist,

chains = 2, iter = 2000, cores = 2, thin = 5)

7. Visualization

Note that the predicted surface for the reference class e.g., $p(Y_i = K)$ does not necessarily form a logistic regression surface, since its relationship with the predictor $x$ is not estimated directly, but relies on the equation $p(Y_i = 1) + p(Y_i = 2) + p(Y_i = 3) = 1$.

Useful links: